10 Deployment & lifecycle maintenance

10.1 Operational deployment

Health data science projects often aim to produce tools that support decision making by presenting insights or suggestions. Considering how your algorithm will be used in practice is critical to success: if the model is used outside its original intended use case, decisions based on its outputs may be invalid.

We recommend planning for this from the start of your project if operational deployment is one of your explicit goals, because moving a model from research into operational use involves substantial additional work. This area is sometimes called MLOps or ModelOps. Engage early with deployment experts, and involve the right mix of expertise for a successful implementation (see Keep subject matter experts involved throughout).

Some questions worth considering include:

- Who are the users, and what knowledge, capability, and/or training will they need?

- Is all the relevant data available at the point it is intended to be used, and is it current?

- Is it feasible to access ongoing operational data supply to support the model?

- How will models, decisions and/or insights be presented to the user?

- Can users understand how the model came to a conclusion and what action is required? (see Transparency, interpretability, and explanation)

- What monitoring, IT security and safeguards are necessary?

- What systems will be utilised, and who is responsible for them?

- Who will provide governance oversight of the model?

- Will the model be updated when new data becomes available?

- What exactly is expected to be deployed? An API? A frontend?

- How are the models and API going to be versioned?

- How much traffic are you expecting?

- What response time and runtime performance are acceptable?

- What level of work is required from warehouse developers when developing models? A simple model is more likely to be implemented than a complicated one, even if it performs somewhat worse.
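As one illustration of the versioning question above, the sketch below attaches both an API version and a model version to every prediction, so that a decision can later be traced back to the model that produced it. It is a minimal sketch under stated assumptions, not a recommended design: `PredictionResponse`, `build_response`, the field names, the model name and the version strings are all hypothetical.

```python
from dataclasses import asdict, dataclass

# Hypothetical identifiers; in practice these would come from your release process.
API_VERSION = "v1"
MODEL_NAME = "readmission-risk"
MODEL_VERSION = "2.3.0"


@dataclass(frozen=True)
class PredictionResponse:
    """Response payload that records which API contract and model produced a score."""
    api_version: str    # version of the request/response contract
    model_name: str     # which model was called
    model_version: str  # version of the trained model artefact
    risk_score: float   # the model output itself


def build_response(risk_score: float) -> dict:
    """Wrap a raw model output with the version metadata needed for traceability."""
    response = PredictionResponse(
        api_version=API_VERSION,
        model_name=MODEL_NAME,
        model_version=MODEL_VERSION,
        risk_score=risk_score,
    )
    return asdict(response)


payload = build_response(0.42)
print(payload["model_name"], payload["model_version"], payload["risk_score"])
```

Versioning the API and the model separately means the model can be retrained without changing the interface that downstream systems depend on.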

The list below outlines ideas and recommendations for operationally deploying machine learning solutions within the medical sector:

- Draw clear lines between research, analytics and software development. If you’re looking to offer software as a service (SaaS), you’ll need software engineers who are familiar with software development practices (e.g. writing production-grade code, developing infrastructure).

- Keep things simple: most prospective customers in the medical sector are conservative and need to understand (at least to some extent) the solutions being offered to them. They are also likely to be using older, established solutions rather than cutting-edge research.

- Many prospective customers in the medical sector will be unwilling to send their data to an API over the public internet. You may need to focus on the niche group of customers who don’t have these constraints, or develop solutions that can be deployed on premise. This has profound implications for your architectural decisions (e.g. federated learning), your ability to provide maintenance and support, and your ability to keep tight control over your source code.

- Identifiable health data is regulated around the world. You need to ensure that you follow the right security practices for your clients to be able to use your services. This also has downstream implications for debugging, logging, and performance tracking.

- Much of the publicly available health data that you may wish to use to train production models has licences that forbid commercial use. Make sure you check these constraints before committing to a project.

- If you’re offering external clients machine learning models that leverage open source data, you typically don’t need to retrain these models regularly, as you’ll most likely be using a static snapshot for a one-off training exercise. This simplifies model management.

- For IT implementation at partner and/or clinical sites, design, governance, and security sign-off will typically be required before people can be allocated to the work. The benefits of the work need to be clearly articulated for it to be prioritised. Ensure there is strong clinical engagement from the partner site to drive this (see End-user Engagement and collaboration).

Also refer to the Ministry of Health - Emerging Health Technology Advice & Guidance on operationalising algorithms

10.2 Lifecycle model maintenance

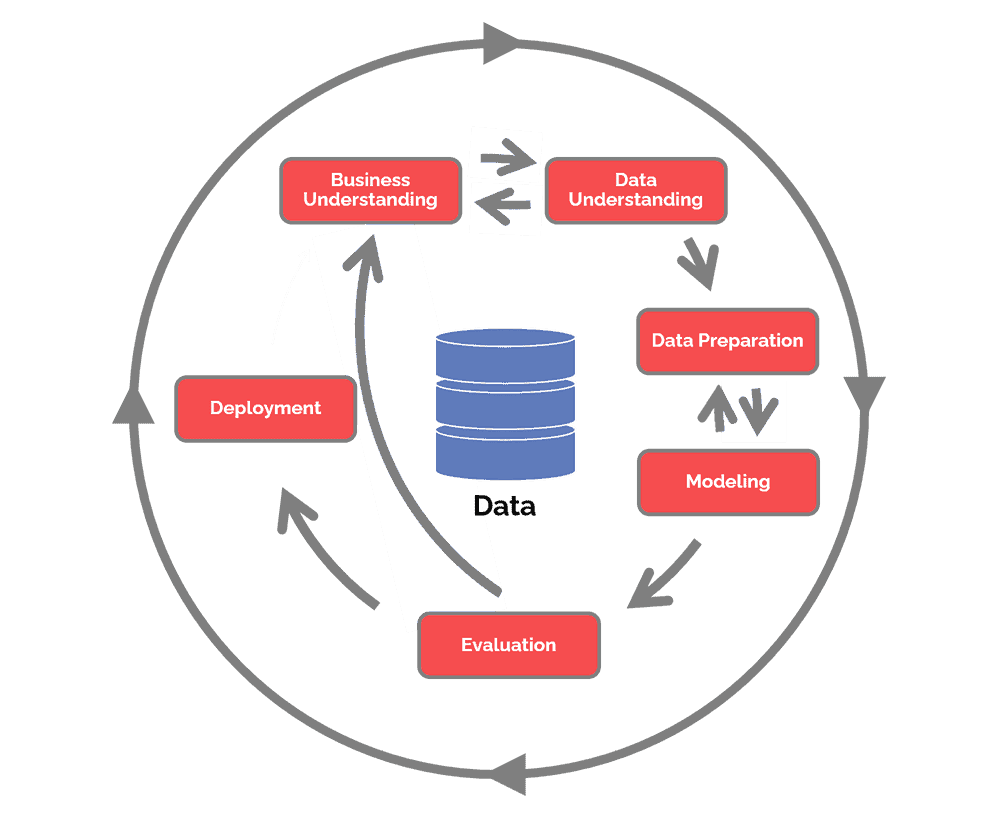

Deploying a model is never the end of modelling work. During its continuing life cycle, the model and its working environment need to be overseen continuously. Some early questions to consider are:

- What’s the governance process?

- Who’s responsible for oversight and/or maintenance?

- What’s the process for monitoring model drift?

- Is the model being used in the way it was originally intended, as outlined in the original use case?

- Do the intended users of the model understand, trust and use the model outputs?

In the case of “model drift” (which occurs when a non-negligible decrease in performance is detected, or when any condition the model needs in order to work properly is no longer satisfied), the model and its application need to be reviewed. Where necessary, part or all of the modelling process should be repeated to refresh the model.

Refer also to Transparency, interpretability, and explanation.

10.2.1 Model refreshment

There are two main approaches for triggering model refreshment:

Time-based refreshment: Retrain the model at a regular interval, regardless of its performance. Better quality data, more current data, data with better population coverage or new data sources may become available over time, so a model trained on updated data is likely to perform better than the existing model. This approach needs a good understanding of how frequently the data changes.

Performance-based refreshment: Continuously monitor a set of model performance metrics (and/or other metrics such as bias) to determine when the model needs retraining. This approach needs a well-chosen panel of metrics and thresholds.
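The two triggers can also be combined in a single check. The following is a minimal sketch rather than a prescribed implementation: the `RefreshPolicy` class, the `refresh_reasons` function, the 180-day interval and the sensitivity and specificity floors are all hypothetical and would need to be set for your own model and data.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class RefreshPolicy:
    """Hypothetical refresh policy; every value here is an illustrative assumption."""
    max_model_age: timedelta  # time-based trigger
    min_sensitivity: float    # performance-based trigger
    min_specificity: float    # performance-based trigger


def sensitivity_specificity(tp: int, fn: int, tn: int, fp: int) -> tuple[float, float]:
    """Compute sensitivity and specificity from confusion-matrix counts."""
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    return sensitivity, specificity


def refresh_reasons(policy: RefreshPolicy, trained_on: date, today: date,
                    tp: int, fn: int, tn: int, fp: int) -> list[str]:
    """Return the reasons (if any) why the model should be reviewed for retraining."""
    reasons = []
    # Time-based trigger: the model is older than the agreed refresh interval.
    if today - trained_on > policy.max_model_age:
        reasons.append("model age exceeds refresh interval")
    # Performance-based triggers: a monitored metric has fallen below its floor.
    sensitivity, specificity = sensitivity_specificity(tp, fn, tn, fp)
    if sensitivity < policy.min_sensitivity:
        reasons.append(f"sensitivity {sensitivity:.2f} below {policy.min_sensitivity:.2f}")
    if specificity < policy.min_specificity:
        reasons.append(f"specificity {specificity:.2f} below {policy.min_specificity:.2f}")
    return reasons


policy = RefreshPolicy(max_model_age=timedelta(days=180),
                       min_sensitivity=0.80, min_specificity=0.90)
# Counts would come from the latest monitoring window with confirmed outcomes.
print(refresh_reasons(policy, date(2023, 1, 1), date(2024, 3, 1),
                      tp=70, fn=30, tn=95, fp=5))
```

Returning the list of reasons, rather than a single yes/no flag, gives whoever is responsible for oversight something to review before a retrain is approved.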

There are other considerations for model refreshment, including:

Any change in how the model is being used: Are the users of the model still using it according to the original use case? If the model is being used for a different purpose, is it appropriate for that purpose?

Potential for increased scrutiny of the model: You don’t want your model to be viewed negatively in public. You should consider how use of the model would look on the front page of the newspaper, particularly if there have been shifts in the inputs, in the outcomes, in the environment (such as new disease variants) or in attitudes that affect social licence.

10.2.2 Model monitoring

Key areas to be monitored during model use include:

The data feed: Have the statistical properties of the data fed into the model changed, or those of the actual data labels or patient outcomes that the model predicts (there will be a lag in collecting these)? Are they still similar to the data that the model was trained on? Has a “concept drift” detector been set up in the monitoring process? How is the bias in the data changing?

The model performance metrics and bias metrics: Has there been a significant drop in model performance or model fairness, and what possible reasons should be taken into account for retraining? A drop could be flagged when a given metric (such as accuracy) or a combination of metrics (such as sensitivity and specificity) falls below a certain threshold, or when there is a clear downward trend in those metrics.

The key assumptions that the modelling was based on: For example, with regard to COVID-19 pandemic modelling, is the currently dominant virus variant still the same as when the modelling data were collected?

The workflow where the model is integrated: Are there any changes in the upstream or downstream steps of the workflow that may make the model less relevant?

The risk, benefit and cost of model usage: Does the benefit outweigh the risk in real use? Is the model as cost-effective in use as was expected at design?
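For the data feed, one commonly used way to quantify a change in the statistical properties of an input is the Population Stability Index (PSI), which compares how a feature is distributed in the training data and in recent operational data. The sketch below is an illustration under stated assumptions, not a complete monitoring solution: the function name, the choice of ten equal-width bins and the `eps` floor are all hypothetical choices. It only looks at one numeric input at a time, and it does not detect a change in the relationship between inputs and outcomes, which requires the (lagged) outcome data.

```python
import math
from typing import Sequence


def population_stability_index(reference: Sequence[float],
                               current: Sequence[float],
                               n_bins: int = 10,
                               eps: float = 1e-6) -> float:
    """PSI between a reference sample (e.g. training data) and a current sample.

    Bins are equal-width over the range of the reference sample; current values
    outside that range are counted in the first or last bin.
    """
    if not reference or not current:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(reference), max(reference)
    if hi == lo:
        raise ValueError("reference sample has no spread; PSI is undefined")
    width = (hi - lo) / n_bins

    def bin_fractions(values: Sequence[float]) -> list[float]:
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), n_bins - 1)  # clamp into the edge bins
            counts[idx] += 1
        # Floor each fraction at eps so that empty bins do not produce log(0).
        return [max(c / len(values), eps) for c in counts]

    ref_frac = bin_fractions(reference)
    cur_frac = bin_fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_frac, cur_frac))


# Illustrative data only: patient ages at training time vs. a shifted recent feed.
training_ages = [float(a) for a in range(20, 80)]
recent_ages = [a + 15.0 for a in training_ages]
print(f"PSI = {population_stability_index(training_ages, recent_ages):.2f}")
```

A frequently quoted rule of thumb treats a PSI above roughly 0.25 as a substantial shift, but any alert threshold should be chosen and validated for the specific model and population.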

For a more comprehensive reference of model monitoring and retraining techniques, refer to A Practical Guide to Maintaining Machine Learning in Production.